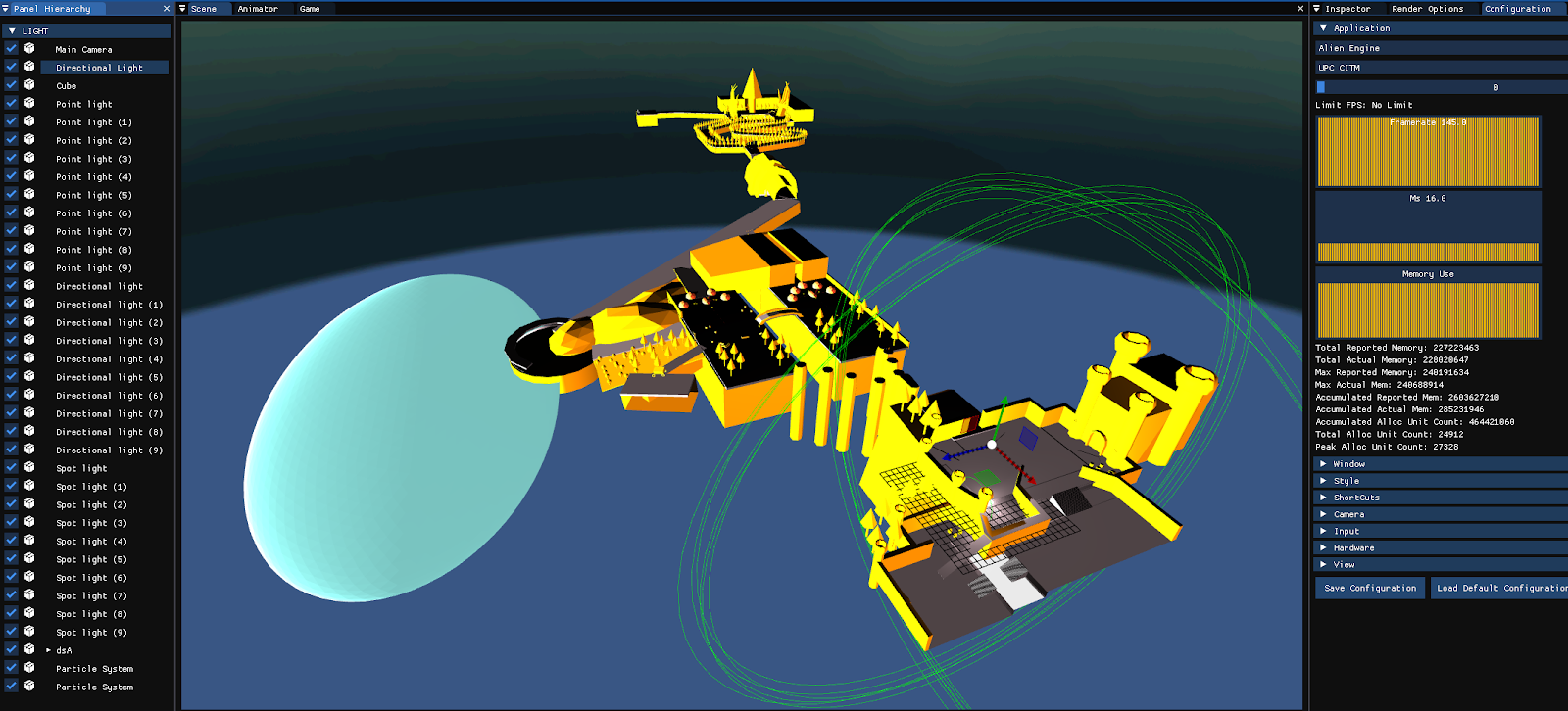

Project overview

Hello there! I'm Sebastià López, and I am a member of the Coding Team of this project.

Most of my work has gone into the engine (known to the team as "The Boiler Room"), and I've found my place there. During this project, I've realised that I really enjoy engine programming, and it's probably what I will focus on in my coding career.

Magical Muffin

Magical Muffin